Daniel Grzech<sup>1</sup>, Mohammad Farid Azampour<sup>1,2,3</sup>, Ben Glocker<sup>1</sup>, Julia Schnabel<sup>3,4</sup>, Nassir Navab<sup>3,5</sup>, Bernhard Kainz<sup>1,6</sup>, and Loïc Le Folgoc<sup>1</sup>

<sup>1</sup> Imperial College London, <sup>2</sup> Sharif University of Technology, <sup>3</sup> Technische Universität München, <sup>4</sup> King’s College London, <sup>5</sup> Johns Hopkins University, <sup>6</sup> Friedrich-Alexander-Universität Erlangen-Nürnberg

We present a novel variational Bayesian formulation for diffeomorphic non-rigid registration of medical images, which learns a data-specific similarity metric in an unsupervised way. The proposed framework is general and may be used together with many existing image registration models. We evaluate it on brain MRI scans from the UK Biobank. The learnt similarity metric, which is parametrised as a neural network, leads to more accurate results than the traditional functions to which we initialise the model, e.g. the sum of squared differences (SSD) and local cross-correlation (LCC), without a negative impact on image registration speed or transformation smoothness. In addition, the method estimates the uncertainty associated with the transformation.
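For readers unfamiliar with the two traditional metrics named above, the following is a minimal sketch of SSD and of one common form of LCC (the squared local correlation coefficient, averaged over sliding windows). It is not the learnt metric from the paper and not the authors' code: it works on flat 1D intensity lists rather than 3D volumes, and the function names, the `radius` parameter, and the `eps` stabiliser are assumptions made for illustration.

```python
def ssd(fixed, moving):
    """Sum of squared differences between two equally sized intensity lists."""
    if len(fixed) != len(moving):
        raise ValueError("images must have the same number of voxels")
    return sum((f - m) ** 2 for f, m in zip(fixed, moving))


def lcc(fixed, moving, radius=1, eps=1e-5):
    """Mean squared local cross-correlation over sliding 1D windows.

    `radius` and `eps` are illustrative choices, not values from the paper.
    """
    if len(fixed) != len(moving):
        raise ValueError("images must have the same number of voxels")
    n = len(fixed)
    total = 0.0
    for i in range(n):
        # Window of up to 2 * radius + 1 voxels, clipped at the borders.
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        f_win, m_win = fixed[lo:hi], moving[lo:hi]
        f_mean = sum(f_win) / len(f_win)
        m_mean = sum(m_win) / len(m_win)
        cross = sum((f - f_mean) * (m - m_mean) for f, m in zip(f_win, m_win))
        f_var = sum((f - f_mean) ** 2 for f in f_win)
        m_var = sum((m - m_mean) ** 2 for m in m_win)
        # Squared correlation coefficient of the two windows; eps avoids 0/0.
        total += cross ** 2 / (f_var * m_var + eps)
    return total / n


fixed = [0.0, 1.0, 2.0, 3.0, 4.0]
moving = [2.0 * v + 1.0 for v in fixed]  # same structure, different contrast
ssd_value = ssd(fixed, moving)  # large, because intensities differ
lcc_value = lcc(fixed, moving)  # close to 1, because local structure matches
```

The toy example shows why the two baselines behave differently: SSD penalises any intensity difference, whereas LCC is insensitive to a local linear change in contrast. SSD is minimised during registration, while LCC is maximised.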

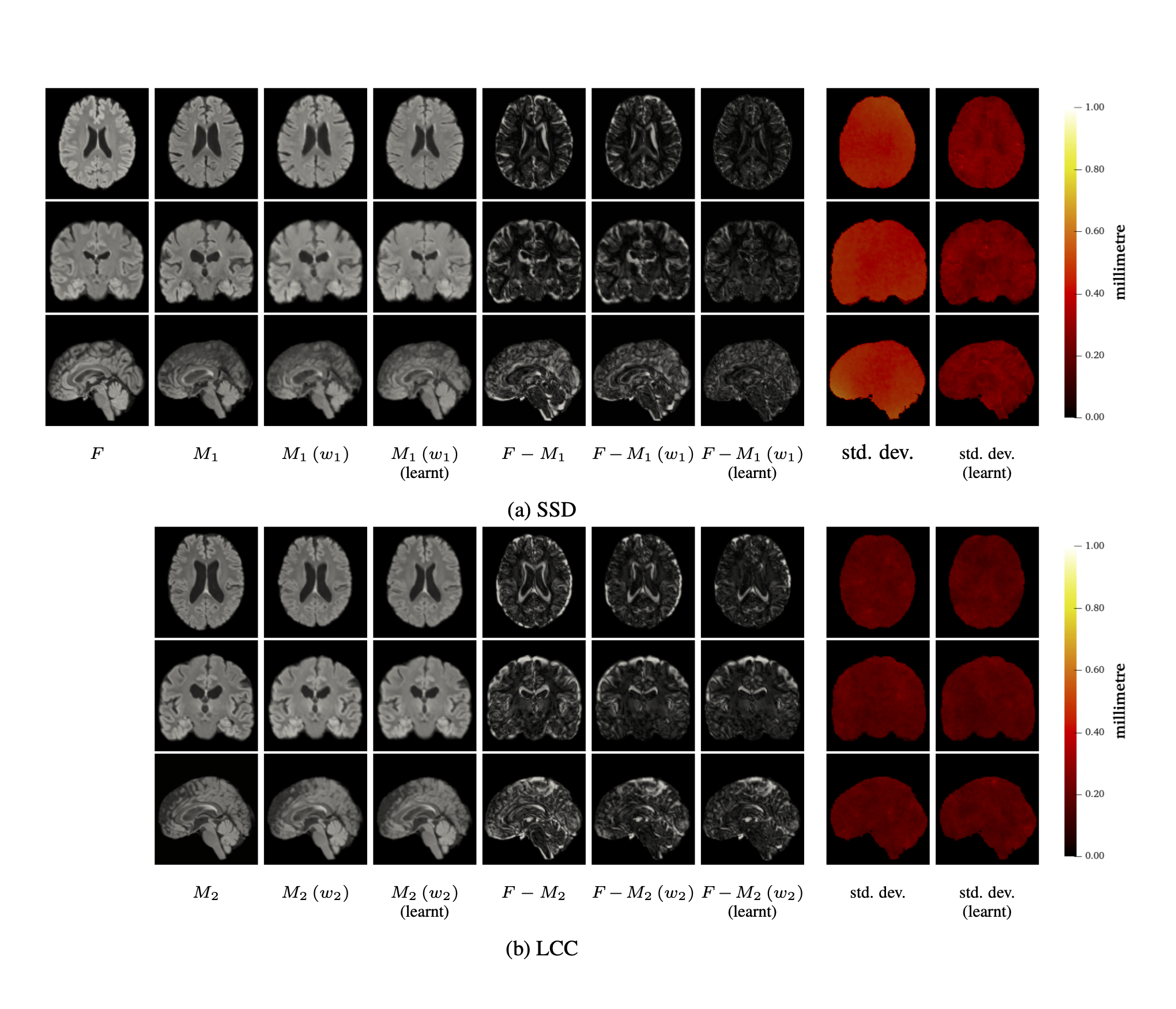

The output on two sample images in the test split when using the baseline and the learnt similarity metrics. For SSD, the average improvement in Dice scores over the baseline on the image above is approximately 27.2 percentage points, and for LCC it is approximately 6.5 percentage points. The uncertainty estimates are visualised as the standard deviation of the displacement field, based on 50 samples. The learnt similarity metric that was initialised to SSD also yields better-calibrated uncertainty estimates than the baseline.
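The uncertainty visualisation described in this caption can be sketched as a voxel-wise standard deviation over sampled displacement fields. The snippet below is a simplified illustration, not the authors' implementation: each sample is a flat list with one scalar displacement per voxel, the samples are synthetic Gaussian draws rather than draws from the model's posterior, and the use of the sample (n - 1) standard deviation is an assumption.

```python
import random
import statistics


def displacement_std(samples):
    """Voxel-wise sample standard deviation over a list of displacement fields.

    `samples` is a list of fields; each field is a flat list of scalars,
    one per voxel (or per voxel component).
    """
    if len(samples) < 2:
        raise ValueError("at least two samples are needed")
    if len({len(field) for field in samples}) != 1:
        raise ValueError("all fields must have the same number of voxels")
    # zip(*samples) groups the values observed at each voxel across samples.
    return [statistics.stdev(values) for values in zip(*samples)]


# Synthetic stand-in for 50 sampled displacement fields of a two-voxel image:
# the first voxel is well constrained, the second is much less certain.
random.seed(0)
samples = [[random.gauss(0.0, 0.5), random.gauss(0.0, 2.0)] for _ in range(50)]
uncertainty = displacement_std(samples)
```

For a 3D displacement field, the same computation would be applied to each of the three components at every voxel.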

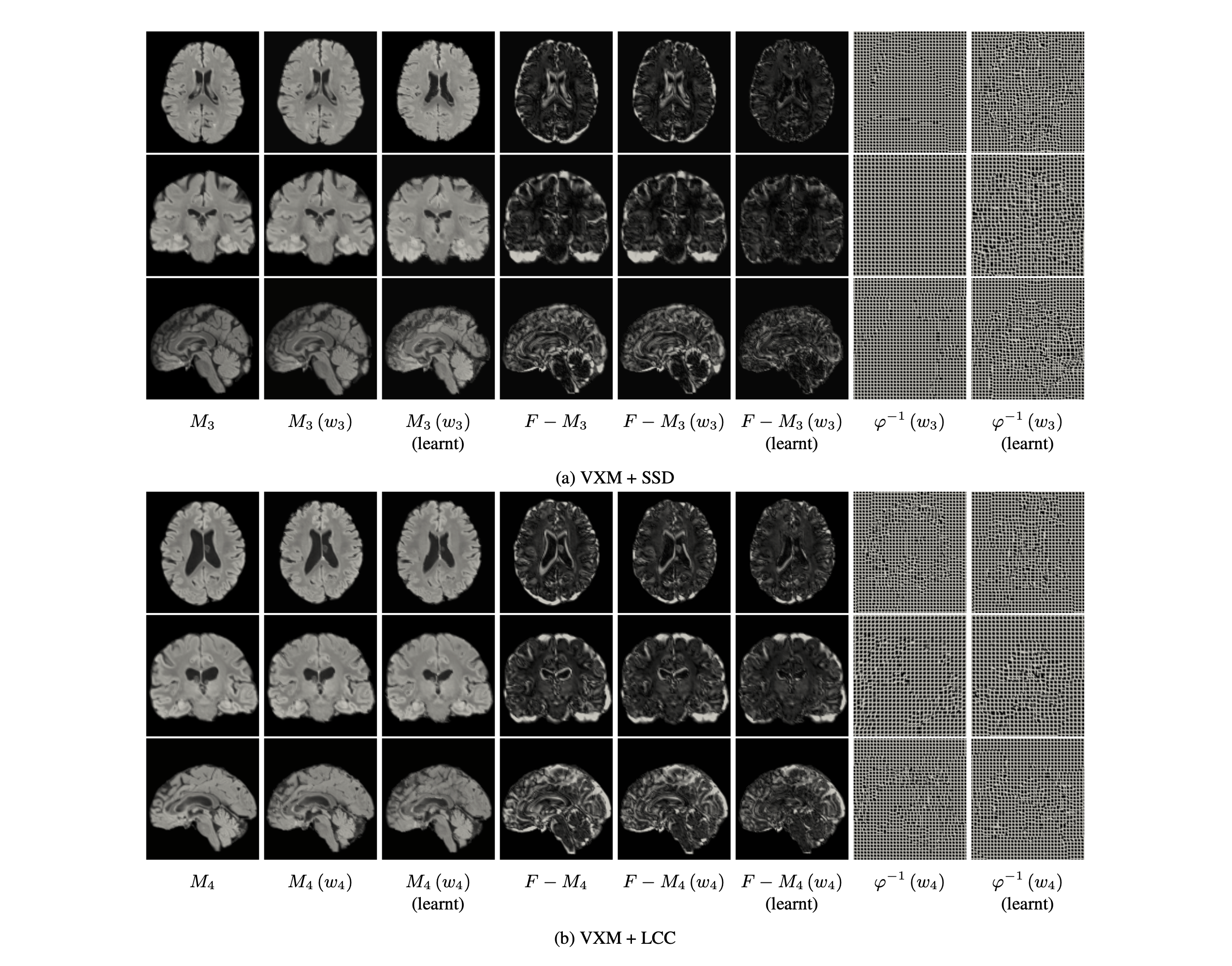

The output on two sample images in the test split when using VoxelMorph trained with the baseline and the learnt similarity metrics. To make the comparison fair, we use the same hyperparameter values for both. For VoxelMorph + SSD, the average improvement in Dice scores over the baseline on the image above is approximately 25.3 percentage points, and for VoxelMorph + LCC it is approximately 11.8 percentage points.

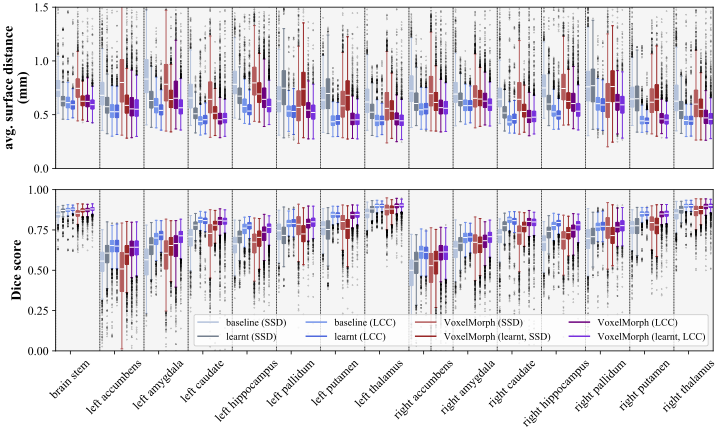

Average surface distances and Dice scores calculated on subcortical structure segmentations when aligning images in the test split using the baseline and learnt similarity metrics. The learnt models show clear improvement over the baselines. We provide details on the statistical significance of the improvement in the paper.
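As a reminder of how the Dice scores reported here are defined, the following minimal sketch computes the Dice coefficient for one labelled structure and the difference between two scores in percentage points. It assumes flat lists of integer labels; the function names and the convention of returning 1.0 when the structure is absent from both segmentations are illustrative choices, not taken from the paper.

```python
def dice_score(seg_a, seg_b, label):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for one label."""
    if len(seg_a) != len(seg_b):
        raise ValueError("segmentations must have the same number of voxels")
    in_a = [v == label for v in seg_a]
    in_b = [v == label for v in seg_b]
    intersection = sum(a and b for a, b in zip(in_a, in_b))
    size = sum(in_a) + sum(in_b)
    if size == 0:
        return 1.0  # assumed convention: structure absent from both
    return 2.0 * intersection / size


def improvement_in_percentage_points(dice_baseline, dice_learnt):
    """Difference between two Dice scores, expressed in percentage points."""
    return 100.0 * (dice_learnt - dice_baseline)


fixed_seg = [1, 1, 0, 0]
warped_seg = [1, 0, 0, 0]
score = dice_score(fixed_seg, warped_seg, label=1)  # 2 * 1 / (2 + 1)
```

A difference in percentage points is an absolute difference between two scores: moving from a Dice score of 0.50 to 0.75 is an improvement of 25 percentage points, not 25 percent.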

Daniel Grzech, Mohammad Farid Azampour, Ben Glocker, Julia Schnabel, Nassir Navab, Bernhard Kainz, and Loïc Le Folgoc. A variational Bayesian method for similarity learning in non-rigid image registration. CVPR 2022.
